Asymptotic Analysis : Big-Oh Notation

from Titas Dey

Been learning about asymptotic analysis.

Definition: Asymptotic analysis is the study of the growth of an algorithm’s running time or space requirement as a function of the input size n, for large values of n, while ignoring constant factors and lower-order terms.

In other words, we analyze how the resource consumption of an algorithm scales as the problem size becomes large.

Time Complexity != Time taken by the algorithm to run

Time complexity is a function f(n) that represents the number of elementary operations performed by an algorithm as a function of input size n ∈ ℕ.

T(n) = number of primitive steps executed for input size n

Asymptotic analysis is independent of

- Hardware specifications

- Programming language

- Operating system and runtime environment

- Input distribution (for a fixed n)

Big-Oh Notation (O-notation)

Definition: Let f(n) and g(n) be functions mapping positive integers to positive real numbers. We say that g(n) is O(f(n)) (read “g of n is big-oh of f of n”) if there exist positive constants c ∈ ℝ⁺ and n₀ ∈ ℕ such that:

g(n) ≤ c·f(n) ∀ n ≥ n₀

The function f(n) is called an asymptotic upper bound for g(n). Intuitively, this means that g(n) grows no faster than f(n), up to a constant multiplicative factor, for sufficiently large values of n.

Visually , if we plot both g(n) and f(n) and can find some point n₀ in the input axis beyond which c·f(n) always stays above g(n). Then g(n) is O(f(n)). i.e We use Big-Oh if we are concerned with the worst case performance of the algorithm

eg:

Say f(n) = 3n² + 5n + 10 , g(n) = n². g(n) = O(f(n)) & f(n) = O(g(n))

If we analyse this

We need to find constants c ∈ ℝ⁺ and n₀ ∈ ℕ such that:

f(n) ≤ c·g(n) ∀ n ≥ n₀

Substituting: 3n² + 5n + 10 ≤ c·n²

For n ≥ 1: – 5n ≤ 5n² – 10 ≤ 10n²

Therefore: 3n² + 5n + 10 ≤ 3n² + 5n² + 10n² = 18n²

Conclusion: Choose c = 18 and n₀ = 1. Then f(n) ≤ 18·g(n) ∀ n ≥ 1.

Hence, f(n) = O(g(n)) or more specifically, f(n) = O(n²).

Again (n) ≤ c·f(n) ∀ n ≥ n₀

Substituting: n² ≤ c(3n² + 5n + 10)

Since f(n) = 3n² + 5n + 10 > 3n² for all n ≥ 1, we have:

n² ≤ 3n² + 5n + 10

This is clearly true for all n ≥ 1.

Conclusion: Choose c = 1 and n₀ = 1. Then g(n) ≤ 1·f(n) ∀ n ≥ 1.

Hence, g(n) = O(f(n)).

Big-Oh Represents a Set of Functions

Say , some T(n) = n³ then

3n² + 5n + 10 = O(T(n))..................i

5n+7 = O(T(n)).........................ii

log(n) = O(T(n))_____iii

if we analytically approach eq(i),eq(ii),eq(iii). All 3 are True But eq(i) != eq(ii) != eq(iii)

When we write f(n) = O(g(n)), the ”=” is not a true equality.

More Precisely: O(g(n)) represents an infinite set of all functions that grow no faster than g(n). So f(n) = O(g(n)) really means f(n) ∈ O(g(n))

Example: O(n²) is the set containing functions like: – n² – 3n² + 5n + 10 – n² + 100n – 50n + 1000 – 5 (constant) – log n

All these functions belong to the set O(n²) because they all grow no faster than n².

When we write O(n²), we're saying that as the input size n becomes very large, the running time grows at most proportionally to n². The constant factors and lower-order terms become negligible in comparison to the dominant term, which is why we drop them in Big-Oh notation. For instance, an algorithm that takes 3n² + 5n + 10 operations is simply O(n²) because the n² term dominates as n approaches infinity.

i.e: We simply ignore the lower order and constant terms when were trying to find the time complexity in Big-Oh

Some Commonly Seen Cases

O(1) – Constant Time: These operations take the same amount of time regardless of input size. Accessing an array element by index, performing arithmetic operations, and returning a value are all O(1) operations. No matter if our array has 10 elements or 10 million elements, accessing the element at index 5 takes the same time.

O(log n) – Logarithmic Time: Algorithms that repeatedly divide the problem space in half exhibit logarithmic complexity. Binary search is the classic example, where we eliminate half of the remaining elements with each comparison. As n doubles, the running time increases by only a constant amount.

O(n) – Linear Time: When we must examine every element exactly once, we have linear complexity. A simple loop that processes each element in an array demonstrates O(n) behavior. If we double the input size, the running time doubles as well.

O(n log n) – Linearithmic Time: Efficient sorting algorithms like merge sort and heap sort operate in O(n log n) time. This complexity arises when we perform a logarithmic number of linear operations or divide-and-conquer with linear merging.

O(n²) – Quadratic Time: Nested loops where each loop runs n times typically result in quadratic complexity. Simple sorting algorithms like bubble sort and selection sort fall into this category. Doubling the input size quadruples the running time.

O(n³) – Cubic Time: Triple-nested loops often produce cubic complexity. Matrix multiplication using the naive algorithm is a prime example.

O(2ⁿ) – Exponential Time: The recursive Fibonacci function is a classic example, where each call branches into two more calls. The running time doubles with each increment of n, making it impractical for even moderately sized inputs.

O(n!) – Factorial Time: Generating all permutations of n elements produces factorial complexity. For n = 10, that's 3,628,800 operations. Yes calculated that in my head,absolutely did not google.

O(1) < O(log n) < O(n) < O(n log n) < O(n²) < O(n³) < O(2ⁿ) < O(n!)

Analyzing Some Algorithms

Example 1: Array Sum

def array_sum(arr):

total = 0

for element in arr:

total += element

return total

The loop runs once per element. Each iteration does constant work. Time complexity: O(n).

Example 2: Finding Maximum Element

def find_max(arr):

if len(arr) == 0:

return None

max_val = arr[0]

for i in range(1, len(arr)):

if arr[i] > max_val:

max_val = arr[i]

return max_val

Single pass through the array with constant-time operations per iteration. Time complexity: O(n).

Example 3: Bubble Sort

def bubble_sort(arr):

n = len(arr)

for i in range(n):

for j in range(n - i - 1):

if arr[j] > arr[j + 1]:

arr[j], arr[j + 1] = arr[j + 1], arr[j]

Nested loops result in roughly n² comparisons. Time complexity: O(n²).

Analyzing a Slightly More Complex Program

Example 4: Binary Search

def binary_search(arr, target):

left = 0

right = len(arr) - 1

while left <= right:

mid = (left + right) // 2

if arr[mid] == target:

return mid

elif arr[mid] < target:

left = mid + 1

else:

right = mid - 1

return -1

Initially, the search space has size n. After each iteration: – 1st iteration: n elements – 2nd iteration: n/2 elements – 3rd iteration: n/4 elements – kth iteration: n/2^(k-1) elements

The loop continues until the search space is reduced to 1 element or becomes empty. We need to find k such that:

n/2^(k-1) ≤ 1

Solving for k: – n ≤ 2^(k-1)

log₂(n) ≤ k – 1

k ≥ log₂(n) + 1

Therefore, the loop executes at most ⌈log₂(n)⌉ + 1 times (ceiling of log₂(n) plus 1).

Since each iteration performs O(1) operations (comparisons, assignments, arithmetic), the total time complexity is : T(n) = O(1) + O(log n)·O(1) + O(1) = O(log n) .

i.e T(n) = O(log(n)) for Binary Search

Conclusion:

- Big-Oh gives an upper bound on how an algorithm scales as input size increases

- We analyse for n → ∞

- Constant terms and lower order terms are ignored

print("Titas,Signing Out!")

Updated JSON

Updated JSON

How to check

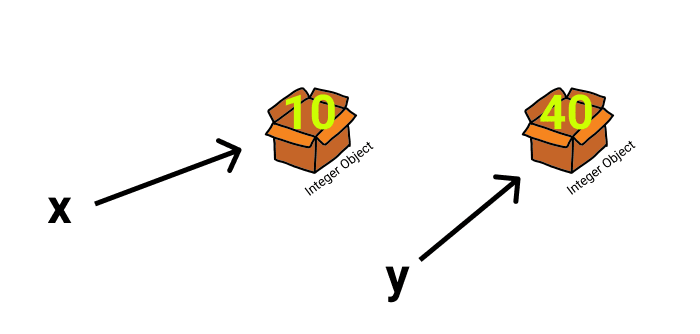

Again, we will use id() to see the ids of the objects.

How to check

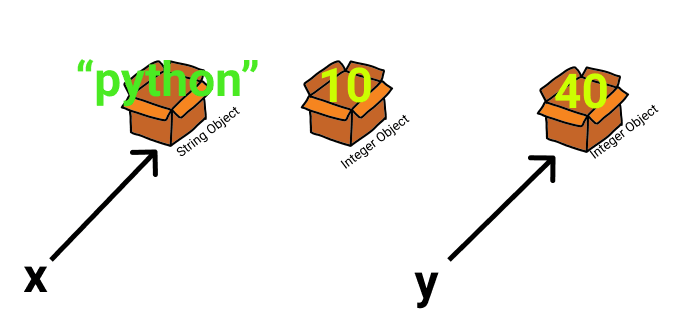

Again, we will use id() to see the ids of the objects. One last question: What if we create two integer objects with the same value?

One last question: What if we create two integer objects with the same value?