sh-like Infix Syntax Using Pipes (|) in Python

Today, we are going to see how to use the | operator in Python to write cleaner code.

Here is some code that uses map and filter to double the odd numbers in a list.

In [1]: arr = [11, 12, 14, 15, 18]

In [2]: list(map(lambda x: x * 2, filter(lambda x: x % 2 == 1, arr)))

Out[2]: [22, 30]

The same code with Pipes.

In [1]: from pipe import select, where

In [2]: arr = [11, 12, 14, 15, 18]

In [3]: list(arr | where(lambda x: x % 2 == 1) | select(lambda x: x * 2))

Out[3]: [22, 30]

Pipe passes the result of one function to the next, and it ships with built-in pipes such as select, where, tee, and traverse.
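To make the idea concrete, here is a minimal sketch of how a | b can be made to call a function, using Python's reflected-or hook __ror__. This is only an illustration of the mechanism and not the library's actual implementation; the names MiniPipe, my_where, and my_select are hypothetical.

```python
class MiniPipe:
    """Hypothetical stand-in for a pipe object (not the pipe library's class)."""

    def __init__(self, func):
        self.func = func

    def __ror__(self, iterable):
        # Python calls this for `iterable | self` when the left operand
        # does not handle `|` for this pair of operands itself.
        return self.func(iterable)


def my_where(predicate):
    # Lazily keep only the items for which predicate(item) is true.
    return MiniPipe(lambda iterable: (x for x in iterable if predicate(x)))


def my_select(transform):
    # Lazily apply transform to every item.
    return MiniPipe(lambda iterable: (transform(x) for x in iterable))


arr = [11, 12, 14, 15, 18]
result = list(arr | my_where(lambda x: x % 2 == 1) | my_select(lambda x: x * 2))
print(result)  # [22, 30]
```

Each stage returns a generator, so nothing is computed until list() consumes the chain.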

Install Pipe

>> pip install pipe

traverse

Recursively unfolds nested iterables:

In [12]: arr = [[1,2,3], [3,4,[56]]]

In [13]: list(arr | traverse)

Out[13]: [1, 2, 3, 3, 4, 56]
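For comparison, the same kind of flattening can be sketched in plain Python with a recursive generator. The helper name flatten is hypothetical, and treating strings as leaves is an assumption made here so that the recursion terminates; the library's own traverse may differ in details.

```python
def flatten(items):
    """Hypothetical helper: recursively yield the leaves of nested iterables."""
    for item in items:
        # Treat str/bytes as leaves: a one-character string iterates to
        # itself, so recursing into it would never bottom out.
        if isinstance(item, (str, bytes)):
            yield item
            continue
        try:
            iterator = iter(item)
        except TypeError:
            # Not iterable, so this is a leaf value.
            yield item
        else:
            yield from flatten(iterator)


nested = [[1, 2, 3], [3, 4, [56]]]
flat = list(flatten(nested))
print(flat)  # [1, 2, 3, 3, 4, 56]
```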

select()

An alias for map().

In [1]: arr = [11, 12, 14, 15, 18]

In [2]: list(arr | select(lambda x: x * 2))

Out[2]: [22, 24, 28, 30, 36]

where()

Only yields the matching items of the given iterable:

In [1]: arr = [11, 12, 14, 15, 18]

In [2]: list(arr | where(lambda x: x % 2 == 0))

Out[2]: [12, 14, 18]

sort()

Like Python's built-in “sorted” primitive. Allows cmp (Python 2.x only), key, and reverse arguments. By default, sorts using the identity function as the key.

In [1]: ''.join("python" | sort)

Out[1]: 'hnopty'
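Since sort is described as mirroring sorted, the built-in is a quick way to see what the key and reverse arguments do. The sketch below uses only the standard library; whether the pipe version accepts the arguments in exactly the same way is an assumption that depends on the installed release.

```python
word = "python"

# Default ordering: characters compared directly.
ascending = ''.join(sorted(word))
print(ascending)  # hnopty

# reverse=True flips the ordering.
descending = ''.join(sorted(word, reverse=True))
print(descending)  # ytponh

# key= chooses what each item is compared by; here, case-insensitively.
names = ["pipe", "Select", "where"]
by_lowercase = sorted(names, key=str.lower)
print(by_lowercase)  # ['pipe', 'Select', 'where']
```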

reverse

Like Python's built-in “reversed” primitive.

In [1]: list([1, 2, 3] | reverse)

Out[1]: [3, 2, 1]

strip

Like Python's strip-method for str.

In [1]: ' abc ' | strip

Out[1]: 'abc'

That's all for today. In this post, you have seen how to install Pipe and how to use its built-in pipes to write short, clean code. You can find more pipes in the library's documentation.

Cheers!

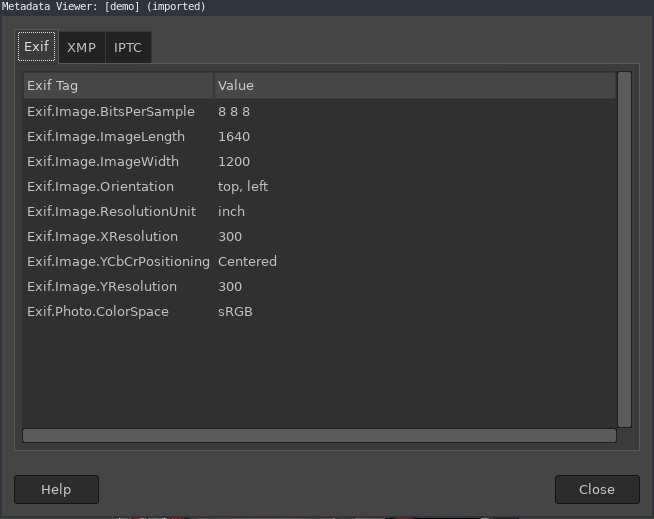

Image metadata from GIMP tool

Image metadata from GIMP tool Above image is taken from this

Above image is taken from this  Image with different rotation

Image with different rotation